04-07-2019

Apache Spark: How to create efficient distributed processing?

On the 30th of May, XTech Community held a hands-on “Spark Intro & Beyond” meetup. The presentation kicked off with an introduction to Apache Spark, a parallel distributed processing framework that has been one of the most active Big Data projects in recent years, in terms of both its usage and the contributions made by its open-source community.

Spark’s Functionalities

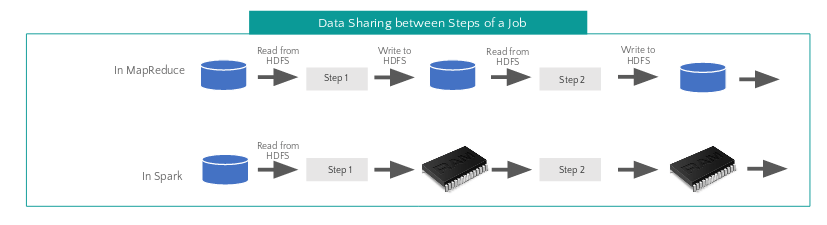

Compared with Hadoop MapReduce, Spark’s main advantages are the ease of writing multi-step jobs through its functional programming API and the ability to keep intermediate data in memory.

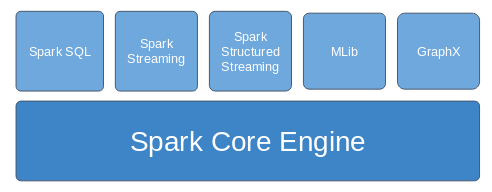

The functionalities offered by Spark were introduced through its built-in libraries, implemented on top of the Spark Core Engine, and through the option of using external libraries available in the Spark Packages repository.

Spark Core Concepts

After the introduction, the focus of the presentation shifted towards the important Spark Core concepts. We learnt:

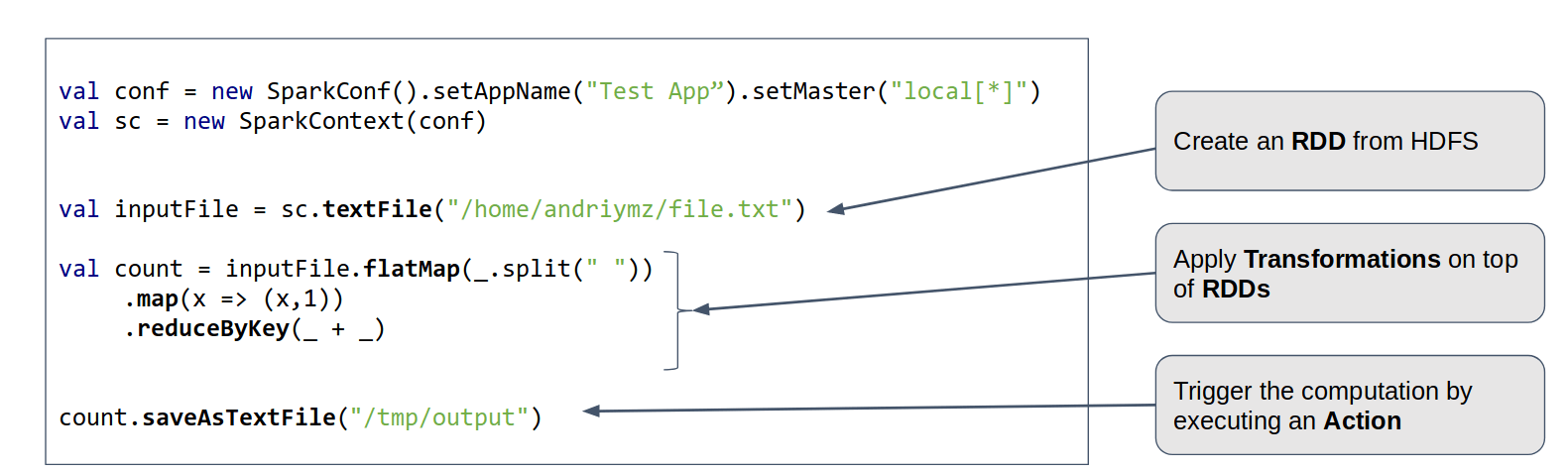

- what the Driver program, written by the developer, comprises;

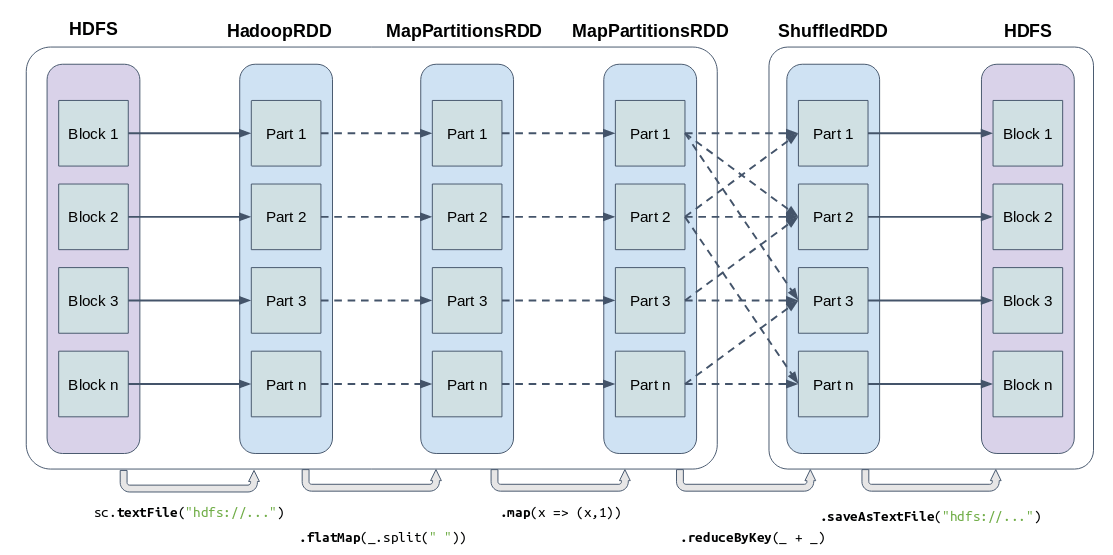

- the RDD abstraction that allows the parallelization of operations over the distributed datasets;

- the types of operations applicable to RDDs: actions and transformations.
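The difference between the two kinds of operation can be sketched without a cluster. The following is a minimal plain-Python model, not Spark code: `LazyDataset` is a hypothetical stand-in for an RDD, and it only illustrates the general idea that transformations describe work while actions trigger it.

```python
class LazyDataset:
    """Toy stand-in for an RDD (hypothetical, for illustration only)."""

    def __init__(self, source, steps=()):
        self._source = source        # any re-iterable collection
        self._steps = tuple(steps)   # pending transformations, not yet executed

    # Transformations: return a new dataset description and do no work.
    def map(self, fn):
        return LazyDataset(self._source, self._steps + (("map", fn),))

    def filter(self, pred):
        return LazyDataset(self._source, self._steps + (("filter", pred),))

    def _run(self):
        # Build the processing chain over the source, one step at a time.
        items = iter(self._source)
        for kind, fn in self._steps:
            items = map(fn, items) if kind == "map" else filter(fn, items)
        return items

    # Actions: execute the chain and return a result to the caller.
    def collect(self):
        return list(self._run())

    def count(self):
        return sum(1 for _ in self._run())


calls = []

def double(x):
    calls.append(x)   # side effect used only to observe when work happens
    return x * 2

ds = LazyDataset([1, 2, 3, 4]).map(double).filter(lambda x: x > 4)
calls_before_action = len(calls)   # nothing has been computed yet
result = ds.collect()              # the action runs the whole chain
```

In this model, calling a second action on `ds` recomputes the chain from the source, which is the behaviour that caching is meant to avoid.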

A simple word count in Spark was used to demonstrate how easy it is to write a first job, as well as all the components involved.
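The job shown at the meetup is not reproduced here. As a rough illustration, the usual shape of a word count (split lines into words, pair each word with 1, sum per word) can be modelled in plain Python. The function name and the sample lines are assumptions made for this sketch; in Spark the three steps would typically correspond to `flatMap`, `map` and `reduceByKey`.

```python
from collections import defaultdict

def word_count(lines):
    # "flatMap" step: every line yields zero or more words
    words = (word for line in lines for word in line.split())
    # "map" step: pair each word with an initial count of 1
    pairs = ((word, 1) for word in words)
    # "reduceByKey" step: sum the counts that share the same word
    counts = defaultdict(int)
    for word, n in pairs:
        counts[word] += n
    return dict(counts)

# Hypothetical input, standing in for the lines of a text file
sample_lines = ["to be or not to be", "to spark or not"]
counts = word_count(sample_lines)
```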

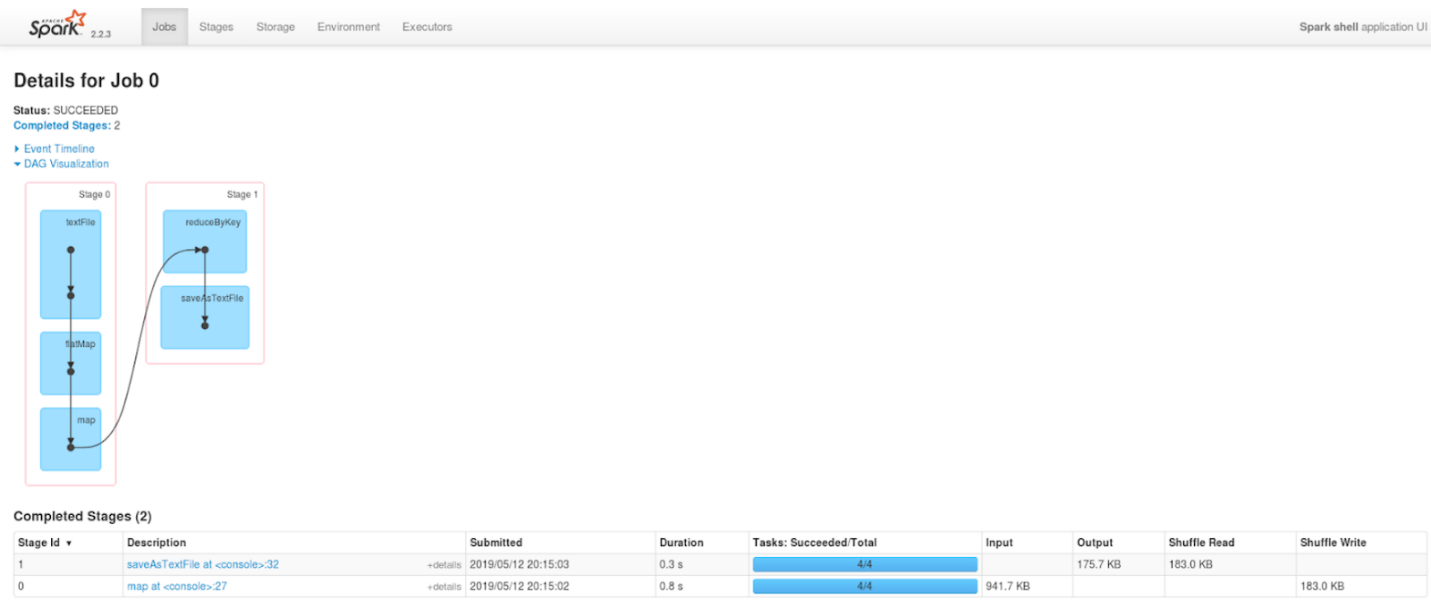

By showing the result of the word count execution in the Spark UI, a web interface that is available while a Spark application is running and allows it to be monitored, we explained the meaning of the physical execution units into which a Spark application is divided: Job, Stage and Task.
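How these units relate can be sketched with a small planning model. It assumes the usual rules: an action triggers a Job, the Job is cut into Stages at each shuffle (wide transformation), and each Stage runs one Task per partition. The function, the list of wide operations and the partition counts below are illustrative assumptions, and the fallback used when no partition count is given is a simplification.

```python
# Operations treated as shuffle boundaries in this toy model (not exhaustive)
WIDE = {"reduceByKey", "groupByKey", "join", "repartition"}

def plan_job(transformations, input_partitions):
    """Split a linear chain of (name, num_partitions) pairs into stages.

    num_partitions is None when the operation does not set it.
    """
    stages = [{"ops": [], "tasks": input_partitions}]
    for name, num_partitions in transformations:
        if name in WIDE:
            # Shuffle boundary: a new stage starts here.
            # Simplification: without an explicit count, keep the previous one.
            tasks = num_partitions or stages[-1]["tasks"]
            stages.append({"ops": [], "tasks": tasks})
        stages[-1]["ops"].append(name)
    return stages

# Word-count-like chain read from 120 input partitions (hypothetical numbers)
chain = [("textFile", None), ("flatMap", None), ("map", None), ("reduceByKey", 100)]
stages = plan_job(chain, input_partitions=120)
total_tasks = sum(stage["tasks"] for stage in stages)
```

With these numbers the model yields two stages, the first with 120 tasks and the second with 100.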

Partitioning

The next topic of the Spark Intro & Beyond meetup was Spark’s partitioning: the number of partitions of an RDD depends directly on how the source data is partitioned, and on other parameters that may be set.

Understanding how the partitioning process works is crucial, since the number of partitions of an RDD determines the execution parallelism between distributed operations, and its control allows the developer to write more efficient applications and better use the cluster’s physical resources.
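A back-of-the-envelope sketch of why this matters, assuming each task occupies one core (the cluster sizes and partition counts are made-up numbers for illustration):

```python
import math

def busy_slots(num_partitions, executors, cores_per_executor):
    """Cores that can work at the same time on one stage."""
    slots = executors * cores_per_executor
    return min(num_partitions, slots)

def task_waves(num_partitions, executors, cores_per_executor):
    """Rounds of tasks needed to process every partition of one stage."""
    slots = executors * cores_per_executor
    return math.ceil(num_partitions / slots)

# Hypothetical cluster: 10 executors with 4 cores each, so 40 task slots
few = busy_slots(8, executors=10, cores_per_executor=4)       # only 8 cores busy
many = busy_slots(120, executors=10, cores_per_executor=4)    # all 40 cores busy
waves = task_waves(120, executors=10, cores_per_executor=4)   # 3 rounds of tasks
```

With only 8 partitions, 32 of the 40 cores sit idle no matter how large the cluster is; with 120 partitions every core is used and the stage finishes in three rounds.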

We went over four different techniques to increase the execution parallelism:

- through the 2nd parameter of the sc.textFile("hdfs://…", 120) function, which sets the minimum number of partitions;

- by increasing the number of partitions of the source Kafka topic being read;

- through the 2nd parameter of wide transformations, e.g. rdd.reduceByKey(_ + _, 100);

- through the rdd.repartition(120) function.
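To make the effect of a partition count concrete, here is a minimal plain-Python model of hash partitioning, the scheme commonly used by key-based wide transformations: each record goes to partition hash(key) mod N. This is not Spark code; `hash_partition` and the sample records are assumptions, and `zlib.crc32` is used only to get a hash that is stable between runs.

```python
import zlib

def hash_partition(records, num_partitions):
    """Distribute (key, value) records into num_partitions buckets by key hash."""
    partitions = [[] for _ in range(num_partitions)]
    for key, value in records:
        index = zlib.crc32(key.encode("utf-8")) % num_partitions
        partitions[index].append((key, value))
    return partitions

# Hypothetical dataset: 1000 records spread over 50 distinct keys
records = [(f"user{i % 50}", i) for i in range(1000)]

four = hash_partition(records, 4)    # at most 4 tasks can run in parallel
eight = hash_partition(records, 8)   # at most 8 tasks can run in parallel
```

All records with the same key always land in the same partition, so raising the count only helps while there are enough distinct keys to spread across the partitions.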

Reduce Shuffle Data

Finally, we showed three techniques that minimize the amount of data sent between two Stages of a single Job, called shuffle data, and so help improve the application’s performance.

Shuffles in Spark are extremely expensive, because the data is either sent over the network between Spark Executors that reside on different cluster nodes, or written and then read back by Executors that reside on the same node.

The techniques presented to decrease shuffle data were:

- Using operations with pre-aggregation, e.g. reduceByKey instead of groupByKey;

- Applying operations over previously partitioned and cached RDDs;

- Broadcast Joins.
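Two of these techniques can be illustrated with a small plain-Python model. It is a sketch under simplifying assumptions, not Spark code: partitions are plain lists, the function names are hypothetical, and shuffle cost is approximated by counting the records that would have to leave their partition.

```python
def shuffled_records_without_preaggregation(partitions):
    # groupByKey-style: every (key, value) record crosses the shuffle
    return sum(len(part) for part in partitions)

def shuffled_records_with_preaggregation(partitions):
    # reduceByKey-style: each partition combines its values locally first,
    # so it sends at most one record per distinct key
    return sum(len({key for key, _ in part}) for part in partitions)

def broadcast_join(large_partitions, small_table):
    # The small table is copied to every task, so each partition of the
    # large dataset is joined in place and nothing is shuffled (inner join).
    lookup = dict(small_table)
    return [
        [(key, (value, lookup[key])) for key, value in part if key in lookup]
        for part in large_partitions
    ]

# Hypothetical data: two partitions of (word, 1) pairs
partitions = [
    [("a", 1), ("b", 1), ("a", 1)],
    [("a", 1), ("c", 1), ("c", 1), ("c", 1)],
]
without_combine = shuffled_records_without_preaggregation(partitions)  # 7 records
with_combine = shuffled_records_with_preaggregation(partitions)        # 4 records

joined = broadcast_join(partitions, {"a": "alpha", "c": "gamma"})
```

The saving from pre-aggregation grows with the number of repeated keys per partition, and the broadcast approach only makes sense when the small table fits comfortably in each Executor’s memory.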

Conclusions

At the end, besides answering some questions about the presentation, we discussed several relevant topics, such as:

- Data locality and the integration of Spark in Hadoop clusters;

- Scenarios in which applying Spark processing makes sense;

- Possible causes of an increase in a Spark Job’s execution time, and how to troubleshoot them through the Spark UI.